Working on many machine learning (ML) projects for many different clients, and discussing the nature of ML project management with other peers and ML specialists we recognized there is sometimes a gap between the expectations of the decision makers who are interested in implementing ML in their business and what can actually be done, at what time range and how much effort and cost it might take. So, we decided to write this guide for managers, CEOs, VP Products, business analysts, startup founders, and, in general anyone who is thinking of hiring in-house or outside help to develop ML algorithms to solve a problem.

In this guide you will learn:

- What to expect when embarking on a machine learning project in your company?

- What you should be wary of?

- Where are the opportunities using machine learning?

- What efforts will be required on your team’s part to make it succeed?

- How much is a machine learning project going to cost you?

- How to recognize good ML engineers?

Some definitions which we will require

- Forms of machine learning – The industry trends these days define several different forms of machine learning:

- Deep learning, or, neural network – a form in which a computer is programmed to run in a similar fashion to neuron cells in a biological brain. There is a network of computer programmed neurons connected to each other, created a graph, on one end the network receives an input and on the other end emits an output

- Statistical analysis – These are the old school techniques, for example – regression or anova analysis. Today, in the industry, they are usually considered part of ML.

- Machine learning refers many times to more sophisticated methods of statistical analysis, methods such as SVM, decision trees, clustering algorithms and more. You do not need to know these specific keywords in order to understand the rest of this guide 😊

- Feature – Single data point of a sample, or, in other words a specific characteristic of a data sample. Examples:

- Size of an object – Width in meter^2, height in centimeter, etc..

- Categorical measure of an object – Male\Female, Car\Bus\Bike\Truck, etc..

- Price, for example price of a sale in dollars.

- The color value of one pixel (0,0,0) – RGB with 3 features

- Signal measure in 1 time point – Amplitude of sound signal (1db), etc..

Technical considerations

The TL/DR version

- Rule of thumb – if a human looking at data and can’t recognize a pattern, ML probably won’t also

- Two type of algorithms – requires training or pre-trained

- Unsupervised or anomaly detection algorithms rarely work, unless you have very clean data

- On the other hand, there are simple implementations for group separation for labeled groups

- The more training data the better, the minimal amount of data varies with project requirements and algorithms implemented

- Data formatting, examination and transformation is roughly 70% of the work

- Deep learning won’t solve your problems, unless maybe if you do vision\signal processing

- Killer feature is more important than the algorithm

Rule of thumb – if a human looking at data and can’t recognize a pattern, ML probably won’t also

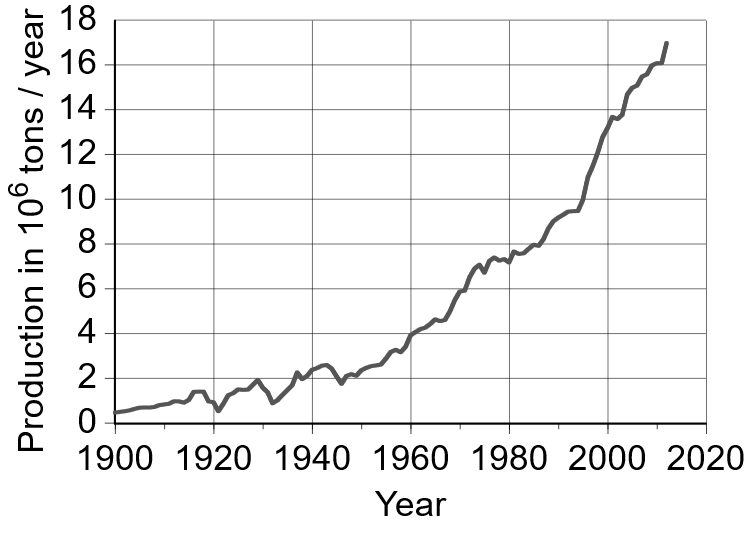

Before running a machine learning algorithm, it helps if you can visualize the data, see repeating patterns with your own eyes. This could be in the form of graphs, showing a clearly visible trend line, such as on the right.

Some of our clients sometimes develop and in-house rule-based decision machine. For example, if a customer bought a dining table, they would recognize he might also be interested in a chair and put in a rule in the software to offer chairs to customers buying tables. This is good, this means there are indeed repeating patterns in the data.

Machine learning could help you find more patterns you have missed. Or, refine pattern definitions you found, making the pattern recognition more accurate and more actionable for you.

Train or use pre-trained algorithm

ML is known for training periods of the algorithm – You supply your own data, or some other existing dataset and you train the algorithm to recognize patterns in the data you are interested in. Sometimes people use the phrases “supervised” and “non-supervised”:

Supervised means that your training data is classified to different groups. For example, if you develop an algorithm to recognize between photos and cats and dogs, in the supervised method, you will have photos of cats, labeled as cats, and images of dogs, labeled as dogs, and you will train an ML algorithm to recognize cats and dogs based on this training data.

Unsupervised means you have training data, but it is not classified. In the cats and dogs example, you will have photos of cats and dogs but no labeling of which is cat and which is dog. You only know there are 2 possibilities for the label of the photo. In this case, you will train the algorithm to distinguish two groups in your training data.

Notice, both supervised and unsupervised methods require training an algorithm.

The second option is using a pre-trained algorithm, or an algorithm that does not require training. These are pre-prepared algorithms, ready to use. For example, there are already existing algorithms for image recognition, which were trained using huge academia datasets to recognize between different objects in an image. Another example, albeit not exactly machine learning, but an AI method, is textual index and search software. These programs come prepared with the ability to analyze text in different languages, without requiring you to supply training text samples.

The pre-trained approach is more generic and could be implemented in every company quite easily. The issues with this approach are:

- Licensing – sometimes you are unable to use a pre-trained algorithm, because it was trained using proprietary data that have license limitations for usage.

- The prediction\estimation\classification quality of the algorithm on your data might be worse, because the algorithm was not trained on your data.

- Pre-trained algorithms exist only for a specific set of problems and specific constructs of data, many times you might not be able to find a pre-trained algorithm which complies to your exact needs. In contrast, training your own algorithm is very generic and can be used for any required data analysis question.

It is always best to train the algorithm using your own specific and custom data. But, many times, companies don’t have enough data for training, so they are forced to use pre-trained algorithms.

It is worth mentioning, if an implementation of an algorithm worked for another company or in another research, doing the same for your company might require a completely different project, it all depends on the dataset you have.

Unsupervised or anomaly detection algorithms rarely work, unless you have very clean data

These methods are sort of ’machine learning magic’ and should be treated as such. Trying to take a bunch of data and put into an algorithm hoping something good will come out, is, as expected, unique.

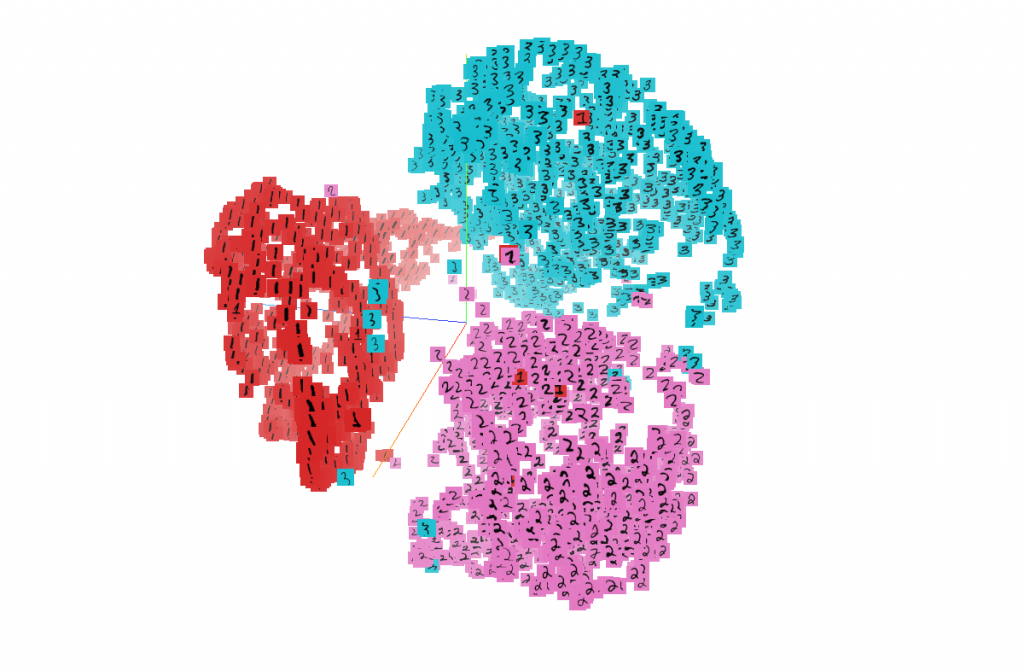

Unsupervised learning can work if there is a true different between the different groups we are trying to identify, and this difference clearly shows in the features (Refer to the rule of thumb above about human looking at data). Also, usually we have to know in advance how many different groups we are expected to encounter in the data.

An interesting example of clustering images of handwritten digits to different groups in an unsupervised manner can be seen here:

Taken from this tensorflow link

Anomaly detection could work, if there are enough samples of the unique occasions we are trying to identify, and these samples are indeed much more different than the standard (non-anomalous) situation.

On the other hand, there are simple implementations for group separation for labeled groups

Sometimes it could be quite easy to have samples from different groups and train a machine learning algorithm to recognize a new sample from an unknown group. For example, in psychological behavior studies these techniques have been used by statisticians for many years in order to recognize correlations between different behaviors, combinations of behaviors and group belonging.

How much data will you require for training?

More is always better in this case. Still, to be more concrete, it really changes with the problem you are trying to solve. This is usually one of the parts of the algorithm implementation – examining the specific data at hand, the specific problem to be solved and seeing what algorithms can work and how much data is required.

A common issue arises when there is a large data set, but it is not spread evenly. For example, when a company wants to recognize what features lead to more customer sales conversions, the company might have information about thousands of potential customers but only a handful of customers who bought. In this case, it might be hard if not impossible to run any type of machine learning algorithm to bring meaningful results. In another example, recognizing heavy machinery malfunctions based on sound, pressure measures, temperature or other physical measures, might be impossible if there are a handful of samples when the machine was not working right, and the rest of the samples are when the machine was working good.

Some benchmarks from our own personal experience:

- In forecasting and trend analysis, to be able to recognize a seasonal (yearly) trend, a minimum f 2 years of samples is required. This is because broadly 1 year is used for baseline estimation and another year for trend estimation.

- In 3D modeling (you can check out our medium post as an example) – At least 5,000 3D models of a specific object are required (5,000 models of chairs)

- In video analysis – When we were working on our lip-reading startup, we saw that we need a minimum of 70,000 hours video of people talking (This was about 10 terabytes of data) to get our neural network to learn anything.

It’s not just how much data, it is also how is it formatted

Many times, an ML project starts off with cleaning the provided data, changing it, simplifying its’ structure. In this stage a lot of bugs and issues are found in the data. There might have been an unexpected issue with how the data was originally prepared or saved, or another issue with how the data was exported. All these take time and effort and have to be done very carefully. Because, otherwise we might train an algorithm on completely incorrect information, not get any good results and blame it on the algorithm instead of the original training data.

In projects, 70% of the actual work is at this stage of data re-formatting and testing.

Deep learning won’t solve your problems, unless maybe if you do vision\signal processing

Sometimes clients start talking to us and they will talk about different things they saw online with deep learning and neural networks that they would like us to implement for them. Deep learning is just another type machine learning algorithm to try out. It usually takes more time and effort to construct and optimize a deep learning algorithm to solve a problem than to use something simpler, such as logistic regression or regular regression (depends on the question at hand). In most cases, it is an overkill to implement deep learning. The cases for which deep learning is a must is for technically hard situations, such as image analysis, text analysis, signal processing, biological data analysis or other types of projects in which features are complex and usually there are thousands of features per data sample.

Killer feature is more important than the algorithm

Many times, it is more worthwhile to work on the features, test them out, try to come up with new features. Usually if you have a good feature the simplest algorithm will be enough. For example, in the above example of a customer buying a table will also buy a chair, if you have the feature – the category of the last item sold (in our case, table), then, even a simple logistic regression model might be able to recognize that the next category sold will be chair.

This means that for an ML engineer, it is more important to very good at simple data analysis and data engineering than at knowing all the different ML algorithms and implementing them.

Timelines, pricing and recruiting considerations

Initial data examination takes a minimum of 1 hour for the simplest of cases to two weeks of full-time work.

Time it takes to research specific algorithms or new algorithms released from academia:

- At least 4 days to go over the most relevant research papers and information.

- Between half a day to 2 weeks to implement basic open source code, if it exists.

- Customizing the algorithms or training on your data might take months, depending on the complexity of the problem at hand, the quality of the training data (or, lack of it) and required KPIs.

In some cases, such as anomaly detection, it is impossible to define actionable technical KPIs because it is never clear how accurate even the best algorithm might turn out.

Custom made projects can be expensive, prices range from 120$ to 300$ per hour work.

Usually starting off small, with quick simple wins and then progressing if you see the value in implementing machine learning is the way to go. It is advisable not to spend months developing before you see any progress. Try 1-2 months, focus on an achievable short-term goal, maybe even a simple report, with simple tools. If this works out, then advance to something more sophisticated.

How to recognize the right ML company\consultant\hire

- They tell you the same things written above.

- They start off with showing you graphs and dashboards over your data instead of diving into developing.

- They say no if they recognize the dataset is not good enough, and they give you tips on how you could still do machine learning if you are interested, what you need to focus on.

- They are expensive.

- They explain basic concepts in simple to understand language to help you understand the project, its’ scope and its’ limitations.

If you have any questions or interest in doing a machine learning project, feel free to contact us on the section to the left of this page.

Also, we have launched datask.co – You ask the data, we answer. This is a machine learning as a service product for those who are interested in implementing machine learning but don’t have the time or resources to do it themselves. Be sure to check it out! 🙂

3 comments

[…] his 15 years of machine learning experience to teach the technology. If you’re interested in Project Management for Machine Learning, or were looking for a Deep Learning Dictionary, or want to write your own patent, Naftaliev is a […]

[…] uses his 15 years of machine learning experience to teach the technology. If you’re interested in Project Management for Machine Learning, or were looking for a Deep Learning Dictionary, or want to write your own patent, Naftaliev is a […]

[…] uses his 15 years of machine learning experience to teach the technology. If you’re interested in Project Management for Machine Learning , or were looking for a Deep Learning Dictionary , or want to write your own patent , Naftaliev is […]